In 1971, James Tobin delivered his presidential address to the American Economic Association on inflation, unemployment, and the relationship between the two. The address was later published as a paper. To understand the connection between inflation and unemployment, Tobin first turned to the definition of full employment.

So what is full employment? Well, full employment is where the supply of labor and the demand for labor are in equilibrium. But that's just a theoretical definition. When observing the real world, how would we know whether the labor market was in equilibrium? Before Keynes, the answer was pretty simple: whatever state the labor market was in was an equilibrium state, and supply met demand. But pairing this theory with the empirical observation that nominal wages refuse to fall has silly implications, namely that large bouts of joblessness are caused by a huge section of the labor force deciding that they are paid too little and leaving the labor market to pursue other endeavors.

Luckily, John Maynard Keynes came along and suggested something else. Keynes argued that the labor market wasn't always in equilibrium. If it was in equilibrium (if the supply of labor exactly matched the demand for labor), then the economy would be at a point where an increase in aggregate demand could not produce any additional employment or output. This idea would later lead to a definition of full employment known as the non-accelerating inflation rate of unemployment (NAIRU), which manifested itself in the real world as a constant rate of inflation whenever the labor market was in equilibrium. If the labor market was not in equilibrium (if demand was greater than supply, say), then we would see an accelerating rate of inflation.

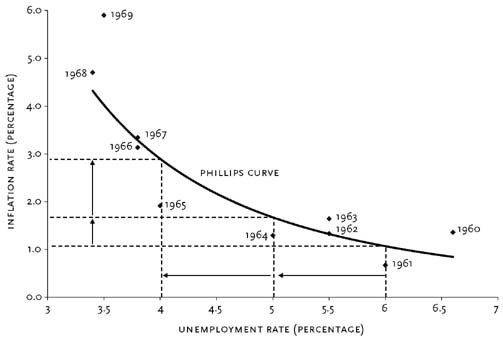
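For readers who want the NAIRU idea in symbols, a common textbook sketch (not Keynes's or Tobin's own notation) is an accelerationist Phillips curve in which expected inflation is simply last period's inflation. The symbols are assumptions introduced for illustration: $\pi_t$ is inflation in period $t$, $u_t$ is the unemployment rate, $u^*$ is the NAIRU, and $\alpha > 0$ is the sensitivity of inflation to the unemployment gap.

$$
\pi_t - \pi_{t-1} = -\alpha\,(u_t - u^*)
$$

When $u_t = u^*$, the right-hand side is zero and inflation holds constant. When $u_t < u^*$, so that labor demand outstrips supply, the change in inflation is positive every period, which is what "accelerating" inflation means in this framework.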

But Tobin was unconvinced by this definition. He didn't think that the rate of inflation really revealed the true preferences of the labor market. Instead, Tobin believed that the "responses of money wages and prices to changes in aggregate demand reflect mechanics of adjustment, institutional constraints, and relative wage patterns and reveal nothing in particular about individual or social valuations of unemployed time vis-a-vis the wages of employment." In other words, accelerating inflation wasn't necessarily a sign that the demand for labor was greater than the supply of labor. This meant that, as the Phillips curve suggested, lower unemployment could be bought at the "cost" of higher inflation.

Tobin illustrated the point with a thought experiment:

> An omniscient and beneficent economic dictator would not place every new job seeker immediately in any job at hand. Such a policy would create many mismatches, sacrificing efficiency in production or necessitating costly job-to-job shifts later on. The hypothetical planner would prefer to keep a queue of workers unemployed, so that he would have a larger choice of jobs to which to assign them. But he would not make the queue too long, because workers in the queue are not producing anything.
>
> Of course he could shorten the queue of unemployed if he could dispose of more jobs and lengthen the queue of vacancies. With enough jobs of various kinds, he would never lack a vacancy for which any worker who happens to come along has comparative advantage.

In other words, what looks like excess demand (more vacancies than unemployed workers) is actually a sign of a functioning labor market that optimally allocates scarce labor resources.

Put another way: if this is the true model of an efficient labor market, then it becomes easier to see why inflation doesn't represent a market failure but instead reflects the mechanics of a well-functioning (at least compared to the alternative) market for labor. But to see that, we have to leave our nice theoretical model and get back to the real world.

So then what's the deal with inflation and unemployment? Well, it turns out that the economy "has an inflationary bias: When labor markets provide as many jobs as there are willing workers, there is inflation." This bias stems from two observations: (1) there is no such thing as a labor market (singular); and (2) the relationship between wage changes and excess labor demand or supply is non-linear.

(1) When we refer to the labor market (singular), we're actually referring to the aggregate of many heterogeneous labor markets (plural), each facing its own supply, its own demand, and its own shocks. And it turns out that these markets are very rarely in equilibrium.

(2) If the relationship between wage changes and excess labor demand or supply is non-linear, with wages rising faster in response to excess demand than they fall in response to an equal amount of excess supply, then it takes a hell of a lot more excess labor supply to cancel out the wage increases resulting from excess labor demand.
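The asymmetry can be stated compactly. Assume, purely for illustration, that wage growth in a single market is $f(x)$, where $x$ is that market's excess labor demand (vacancies minus unemployed workers, as a share of its labor force), and that $f$ is increasing, strictly convex, and satisfies $f(0) = 0$. Applying convexity at the midpoint of $x$ and $-x$ gives

$$
0 = f(0) = f\!\left(\tfrac{x + (-x)}{2}\right) < \frac{f(x) + f(-x)}{2}
\quad\Longrightarrow\quad
f(x) > -f(-x) \quad \text{for } x \neq 0.
$$

So the wage increase from excess demand $x > 0$ is larger than the wage decrease from an equal excess supply. Because $f$ is increasing, any excess supply $y$ that fully offsets it, meaning $f(-y) = -f(x)$, must satisfy $y > x$ whenever such a $y$ exists.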

Combining these two properties into an example makes the inflationary bias clear. Suppose there are two labor markets in disequilibrium, one suffering from excess demand and one suffering from excess supply, with the two imbalances equal in size so that, in aggregate, there are as many vacancies as unemployed workers. Getting both markets into equilibrium would require moving the excess supply in one market over to meet the excess demand in the other, and that takes time. In the meantime, since the function relating wage changes to excess labor demand or supply is non-linear, the rise in wages in the excess-demand market more than offsets the fall in wages in the excess-supply market, resulting in an economy-wide rise in wages (otherwise known as inflation).
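To make the two-market example concrete, here is a minimal Python sketch. The wage-adjustment function `wage_growth` and its parameters `a` and `b` are hypothetical choices, made only so that wage growth is increasing and convex in excess demand; they are not Tobin's estimates. Two equally sized markets carry equal and opposite imbalances, so aggregate excess demand is zero, yet average wage growth comes out positive. The helper `offsetting_excess_supply` (also hypothetical) solves for how much excess supply would be needed to cancel a given excess demand.

```python
import math


def wage_growth(x: float, a: float = 2.0, b: float = 20.0) -> float:
    """Hypothetical percent wage growth given excess labor demand x.

    x = (vacancies - unemployed) / labor force in one market. The exponential
    form is an illustrative assumption: it is increasing, convex, and zero at
    x = 0, so wages rise faster under excess demand than they fall under an
    equal excess supply, and they can never fall by more than `a` percent.
    """
    return a * (math.exp(b * x) - 1.0)


def aggregate_wage_growth(excess_demands, sizes):
    """Labor-force-weighted average wage growth across markets."""
    total = sum(sizes)
    return sum(s * wage_growth(x) for x, s in zip(excess_demands, sizes)) / total


def offsetting_excess_supply(x: float, a: float = 2.0, b: float = 20.0) -> float:
    """Excess supply y whose wage fall cancels the wage rise from excess demand x.

    Solves wage_growth(-y) == -wage_growth(x) for y under the assumed form.
    """
    rise = wage_growth(x, a, b)
    if rise >= a:
        raise ValueError("under this form, wages cannot fall far enough to offset")
    return -math.log(1.0 - rise / a) / b


# Two equally sized markets with equal and opposite imbalances:
# 3% excess demand in one, 3% excess supply in the other.
markets = [0.03, -0.03]
sizes = [1.0, 1.0]

aggregate_excess = sum(x * s for x, s in zip(markets, sizes)) / sum(sizes)
print(f"aggregate excess demand: {aggregate_excess:+.3f}")
for x in markets:
    print(f"market with x = {x:+.2f}: wage growth {wage_growth(x):+.2f}%")
print(f"economy-wide wage growth: {aggregate_wage_growth(markets, sizes):+.2f}%")
print(f"excess supply needed to offset 3% excess demand: "
      f"{offsetting_excess_supply(0.03):.1%}")
```

With these made-up parameters, wages in the excess-demand market rise by about 1.64% while wages in the excess-supply market fall by only about 0.90%, so economy-wide wage growth is roughly +0.37% even though vacancies and unemployment balance in aggregate. Seen from the other side, about 8.6% excess supply would be needed to cancel the wage pressure from 3% excess demand. The exponential form was picked only because it is simple, increasing, and convex; any function with those properties gives the same qualitative result.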

And this is why higher inflation is associated with lower unemployment. In sum, an optimal allocation of unemployed labor resources requires the "excess" demand of more vacancies than unemployed workers, and this implies inflation. Furthermore, the observations that there are many distinct labor markets that are often out of equilibrium, and that the function relating wage changes to excess labor demand or supply is non-linear, mean that if employed labor resources are to be free to move to more efficient uses, then, once again, inflation will be a byproduct.